Banking Scams Guide 2026

October 14, 2025

This article is part of "Eye on Fraud by IronVest," an ongoing series on banking fraud where we explore the latest banking scams and fraud impacting financial institutions worldwide.


Our banking scams guide walks you through the most common scams targeting financial institutions and their customers in 2026.

Whether it’s an NFL linebacker or one of the 2.6 million people who fell victim to financial scams last year, your customers, front-line staff, and internal teams are all feeling the pain of financial crime right now.

Yet scams aren’t new. As far back as 300 B.C., Greek merchants Hegestratos and Zenosthemis famously tried (and failed) to pull off an insurance scam.

What has changed dramatically over the last decade, and especially since the pandemic, is the scale, speed, and success rate of those attacks.

→ In 2023, just over a quarter (27%) of individuals who reported fraud experienced financial losses; by 2024, that share had climbed to 38%.

→ Bank transfers topped the list of payment-related losses last year, accounting for a staggering $2 billion in fraud.

The clear trend in fraud over the past decade is that as remote banking becomes easier for customers, it also opens up more attack vectors for fraudsters. With criminal interest surging, fraud rates will only keep rising.

Within days of OpenAI’s Sora 2 release, we saw some incredibly realistic deepfakes, many featuring OpenAI co-founder and CEO Sam Altman. A deepfake of Altman stealing GPUs became the most-liked video on the Sora app.

Yet a large majority of people (69%, according to 2025 studies) remain convinced they can spot scams, including deepfakes, which makes the realism of today’s deepfakes all the more alarming.

All of this suggests that we’re entering what may be the most pivotal moment for the consumer banking industry in a generation.

Deepfakes are on the verge of mass weaponization, but there’s still time to get ahead of scammers using AI by rethinking authentication from the ground up to withstand AI-enabled attacks.

In this guide, you’ll find an up‑to‑date taxonomy of the 2025 bank scam landscape, complete with real‑world examples and the insights you need to stay ahead of tomorrow’s scams and protect both your customers and your revenue.

Bank Scam Threats Every Financial Institution Must Track In 2025

In this section, we examine the current banking scam landscape, highlighting the fraudulent schemes and banking scams we’re seeing in the field, the customer segments most at risk, the attack vectors fraudsters exploit, and where existing solutions succeed or fail against each threat.

Impersonation Scams

In impersonation scams, attackers pretend to be trusted individuals (e.g., executives, customers, or bank staff) to trick targets into authorizing fraudulent transactions.

CEO Fraud (BEC)

Business Email Compromise (BEC), or CEO fraud, is when attackers impersonate a high-level executive (often the CEO or CFO) to trick finance employees into making unauthorized wire transfers.

A recent FBI Internet Crime Report shows a since 2022.

Primary targets

Internal: Bank employees.

External: Business banking customers.

How it works

Attack vectors: Social engineering, spoofed emails.

Tactics: Urgent tone, well-researched details, spoofed domains.

Current solution challenges

Current solutions to CEO fraud include out-of-band verification and payment fraud models that try to identify anomalies in the transaction, but their effectiveness is low.

Because CEO fraud payments are initiated by genuine employees through normal channels, they are effectively legitimate transactions and are unlikely to be flagged by a fraud detection system.

Customer Impersonation

Customer impersonation fraud is when a scammer poses as a legitimate bank customer to gain unauthorized access to an account, request a funds transfer, or reset login credentials.

Impersonation scams have increased as scammers become more adept at utilizing AI for fraud attempts, and existing controls struggle to keep pace.

Banks risk reputational damage and regulatory scrutiny if they fail to stop these attacks.

Primary targets

Internal: Call center agents, branch staff, online support teams.

How it works

Attack vectors: Social engineering, spoofed phone numbers, stolen PII, and AI-generated voice cloning.

Tactics: Leveraging personal data to answer security questions, using deepfake voices to trick voice-ID systems.

Current solution challenges

Current solutions to customer impersonation include multi-factor authentication (MFA) and one-time passwords (OTPs), but their effectiveness is low.

MFA and OTPs often prove inadequate in blocking unauthorized access.

Fraudsters carry out SIM‐swap attacks or exploit weaknesses in SMS delivery to intercept one-time codes, and they use real-time social-engineering tactics (for example, calling customers while pretending to be their bank) to trick them into sharing their OTPs.

Meanwhile, “MFA fatigue” can desensitize users until they habitually approve fraudulent login requests without a second thought. AI-powered voice cloning further enhances these schemes by convincingly mimicking legitimate callers to extract codes.

In many cases, attackers armed with stolen personal data can pre-validate their identity, effectively sidestepping the triggers that would invoke MFA.

Family/Friends Impersonation

Family/friends impersonation scams occur when fraudsters impersonate someone the victim knows, typically a family member or close friend, and request “urgent” financial assistance.

In this scam, fraudsters typically send their victim a text or social media message starting with “Hi Mom/Dad, this is my new/temporary number…” and then request help. Alternatively, the scammer may call their victim from a spoofed number.

A related variation, sometimes called a “grandparent scam,” targets older victims, with the fraudster pretending to be a grandchild in distress (for example, needing bail money or help while traveling).

Banks face risk here because victims often make instant, authorized payments that are difficult to reverse once they’re sent.

Primary targets

External: Individual consumers, particularly older adults, parents, and grandparents.

How it works

Attack vectors: Messaging apps, social media, email, and spoofed caller IDs.

Tactics: Fraudsters contact victims pretending to be a loved one in difficulty, often claiming to have lost their phone, to have been in an accident, or to be in urgent need of money.

Current solution challenges

Current solutions to family or friends impersonation include customer education and payment verification prompts, but their effectiveness is low.

Education campaigns prompt customers to “pause and verify,” but in the middle of an emotional situation, rational decision-making is often replaced by urgency and fear.

Payment warnings and confirmation screens rarely help either, because victims genuinely believe they’re helping a loved one.

Once the victim makes a payment, funds are transferred quickly across accounts or into cryptocurrency, making recovery extremely difficult or impossible.

Bank Employee Impersonation

Bank employee impersonation involves fraudsters posing as legitimate bank representatives to convince victims to authorize an illegitimate transaction, such as sending money from their account to a fraudster.

A is currently affecting US banks and their customers.

An Ohio retail banking client recalled receiving an email almost indistinguishable from Wells Fargo’s official communications, asking her to verify her debit card information. Within minutes of clicking the link, $3,000 vanished from her account.

Primary targets

External: Consumers, particularly older adults.

How it works

Attack vectors: Pop-up ads, unsolicited phone calls, and emails spoofing official communications.

Tactics: Urgency or fear appeals (“Your account is compromised!”), believable bank impersonations, and step-by-step instructions that lead victims to install malware or relay credentials.

Current solution challenges

The current solution to bank employee impersonation is customer education, but its effectiveness is low.

Many consumers, especially older adults, struggle to absorb and retain one-off warnings that come in the form of email alerts and branch flyers. At the same time, fraudsters continually improve their tactics with more convincing bank impersonation scams.

Educational materials rarely reach all at-risk segments, and even when they do, the urgency and fear invoked by a well-timed spoofed call or email can often override the caution previously learned.

As a result, awareness campaigns alone can’t keep pace with the evolving sophistication and immediacy of these attacks, leaving customer education an inadequate line of defense.

Social Engineering

Most current fraud defenses place the burden on consumers themselves. Typical approaches include sending scam awareness links or issuing generic transaction warnings.

Yet these measures rarely succeed in meaningfully changing user behavior. Worse, they often introduce unnecessary friction and, in some cases, even increase vulnerability. By highlighting certain risks while overlooking others, they can create blind spots.

They also open new avenues for fraudsters, for example, fake “scam awareness” texts or spoofed confirmation links.

Social engineering scams thrive on these weaknesses. By exploiting human psychology (such as trust, fear, or urgency), fraudsters can sidestep technical safeguards and persuade victims to act against their own best interests.

Authorized Push Payment (APP) Fraud

APP fraud occurs when an individual is deceived or coerced into willingly authorizing a real-time payment to an account controlled by a fraudster.

In markets where instant payments are common, such as the United Kingdom, APP fraud accounts for almost 40% of all fraud losses, and regulators now require banks to reimburse victims (up to £85,000 per claim under the UK’s mandatory reimbursement rules).

Primary targets

External: Consumers using instant payments.

How it works

Attack vectors: Sophisticated social engineering, deepfakes/AI, and direct exploitation of end-to-end instant payment systems.

Tactics: Fraudsters pose as legitimate payees or service providers, creating a sense of urgency that convinces victims to authorize payments immediately.

Current solution challenges

The current solution to APP fraud is customer education, but its effectiveness is low.

Despite extensive awareness campaigns from banks (e.g., email advisories and in-app warnings), customer education remains ineffective in combating APP fraud.

Victims under pressure and time constraints often overlook or forget general safety tips, especially when faced with very convincing deepfake calls or urgent messages that mimic trusted financial institutions.

Additionally, educational materials struggle to keep pace with fraudsters’ evolving social-engineering tactics.

Romance Scams

Romance scams involve a scammer building a fake emotional relationship with a victim over time. They might pretend to be someone’s boyfriend or girlfriend, eventually persuading them to send money or invest in fraudulent schemes.

Once trust is built, victims might authorize large payments to the scammer for dubious reasons.

Your customers, especially elderly ones, face real risk from these kinds of scams. The FBI received 17,910 complaints about confidence fraud/romance scams in 2024.

Primary targets

External: Bank customers and consumers.

How it works

Attack vectors: Social engineering, fake identities, messaging/dating apps.

Tactics: Building emotional rapport, sharing personal stories, promising future repayment or investment returns, and requesting funds for medical bills or similar emergencies.

Current solution challenges

The current solution to romance scams is customer education, but its effectiveness is low.

Banks’ efforts to educate customers about romance fraud often feel generic and struggle to break through the emotional bond scammers establish with their victims over weeks or months.

Victims convinced they’re in a genuine relationship tend to dismiss or rationalize standard safety tips, and educational materials seldom address the nuanced grooming tactics or emotional vulnerabilities at play.

By the time a customer considers wiring funds, they’re too invested in their “relationship” to remember generic “stay alert” advice.

Pig Butchering Scams

Pig butchering scams are similar to romance scams, but more focused on investments.

The victim in this scam is lured into making financial transfers towards an “investment” of some kind, which looks (to them) legitimate, often via a trusted intermediary. The scammer then vanishes once the final, large deposit is made.

Cryptocurrency is enabling this kind of fraud on a massive scale. A CryptoCore campaign in 2024 leveraged deepfake videos to steal nearly $4 million through over 2,000 transactions.

Primary targets

External: Retail investors, crypto-curious individuals, and anyone enticed by high-return opportunities.

How it works

Attack vectors: Social engineering, fake cryptocurrency platforms, deepfake videos, messaging apps, and romance-style grooming tactics.

Tactics: Scammers establish trust through staged success stories, offer small initial gains, then press for larger capital injections once confidence is secured.

Current solution challenges

The current solution to pig butchering scams is customer education, but its effectiveness is low.

Generic warnings about investment scams often fail to resonate with victims who have already seen small, believable returns and feel personally connected to their “advisor.”

Educational materials can’t replicate the one‐on‐one trust-building that scammers cultivate, nor can they fully anticipate every new deepfake video or counterfeit trading site.

By the time a customer suspects they've been scammed, they’ve often already made multiple transfers to fraudsters.

Marketplace Scams

Marketplace scams occur on online marketplaces (e.g., Facebook Marketplace) or peer-to-peer platforms (e.g., Etsy), where fraudsters pose as buyers or sellers.

Fraudsters trick victims into sending money for non-existent goods or purchasing legitimate items with fraudulent payments.

In some marketplaces, scams make up as much as . Banks face risk here because customers who fall victim are likely to try to reverse charges and pass on their losses.

Primary targets

External: Individual consumers using online marketplaces and P2P payment apps.

How it works

Attack vectors: Fake product listings, payment reversals, social engineering, overpayment scams, and impersonation of trusted platforms or buyers.

Tactics: Posting too-good-to-be-true deals, pushing buyers to complete transactions off-platform, sending counterfeit payment confirmations, and reversing or disputing payments after receiving goods.

Current solution challenges

The current solution to marketplace scams is customer education, but its effectiveness is low.

Broad “beware of scams” messages from financial institutions can’t compete with the immediacy of a tempting offer, leaving education alone as an ineffective defense.

Buyers chasing the perfect deal usually won’t remember generic safety advice, and, as with several of the scams above, warnings can’t keep pace with ever-changing scam tactics or every niche platform.

Educational materials seldom appear at the exact moment of the transaction, and once a payment is made, reversing it becomes a lengthy and often complicated process.

Guided Scams

Guided scams are a type of scam in which fraudsters pose as helpful advisors or support personnel and trick banking customers into performing fraudulent transactions in real time.

Fraudsters typically “guide” victims step-by-step (by phone, chat, or remote access) into sending money to the scammer’s account or a mule account. Since the victim authorizes the payment themselves, these scams are harder for banks to detect and reimburse.

In one real-world example, a victim was tricked by a fake “bank representative” into transferring $1.6 million to a “segregated client account” for a term deposit. The money was quickly laundered through multiple accounts.

Primary targets

External: Individual consumers, particularly those who naturally trust authority figures like bank staff.

How it works

Attack vectors: Phone calls, messaging apps, remote desktop tools, fake bank or government websites, investment platforms, and impersonation of legitimate organizations.

Tactics: Convincing victims to install remote-access software or transfer funds to scammers’ accounts themselves. Victims often think they’re resolving a problem. Scammers tend to maintain real-time communication with the victim during the transaction to ensure they follow the instructions.

Current solution challenges

Current solutions to guided scams include customer education and transaction monitoring, but their effectiveness is low.

Though customer education can raise awareness of guided scams, these scams manipulate victims in real time and exploit trust under pressure, rendering preventive messages ineffective in the moment.

Transaction monitoring tools also struggle with guided scams, as the payment is authorized by the customer.

By the time the victim realizes that they’ve been scammed, the funds are typically unrecoverable, having been moved through multiple mule accounts or converted into crypto within minutes.

Deepfake/AI-enabled Scams and Fraud

Advancements in AI technology enable criminals to generate convincing audio and video deepfakes that can deceive both individuals and systems during live interactions.

Voice Cloning for Authorizations

“My voice is my password” is not safe.

Voice cloning is an emerging fraud tactic in which attackers leverage AI-generated synthetic speech to impersonate a customer’s voice, bypassing voiceprint or spoken-phrase authentication checks.

A 15-second audio sample is now all that is needed to replicate someone’s voice. Synthetic audio is so advanced that generative AI companies such as OpenAI have advised against using voice as an authentication method.

Primary targets

Internal: Bank contact centers and IVR (interactive voice response) systems relying on voiceprint or spoken phrase authentication.

How it works

Attack vectors: Theft of voice samples from sources such as voicemail, social media videos, or customer service recordings, as well as advanced AI-voice synthesis tools, spoofed caller IDs, and replay attacks.

Tactics: Fraudsters feed a 15-second (or even shorter) audio clip into a voice cloning engine, then place calls to banks that mimic legitimate customers’ tone, cadence, and passphrase responses.

Current solution challenges

The current solution to voice cloning for authorizations is voice biometrics, but its effectiveness is low.

Voice-biometric systems struggle to keep up with voice cloning technologies that are continuously advancing.

These solutions rely on detecting subtle quirks in pitch, cadence, or pronunciation, but AI-generated voices can reproduce those same nuances, rendering anti-spoofing measures almost obsolete.

Background noise, call quality, and microphone differences further complicate the acoustic fingerprint, leading to both false rejects and false accepts. And because voiceprints can’t be “reset” once compromised, a single leak of audio data can permanently undermine the system’s integrity.

Deepfake Video Injection Scams

Deepfake video injection is a scam in which fraudsters use AI-generated or AI-manipulated videos (often paired with synthetic audio) to impersonate individuals during video calls or video-based identity verification processes.

In the past 12 months, we’ve seen multiple cases of enterprises inadvertently hiring North Korean spies using deepfakes to pass interview screenings. The same advanced technology could be used to compromise your bank right now.

Primary targets

Internal: Banks using video-based KYC/onboarding, remote identity verification vendors, and internal staff targeted via video conferencing (e.g., finance or HR teams).

How it works

Attack vectors: AI-generated video avatars, manipulated live video feeds, synthetic video ID documents, and real-time deepfake overlays during Zoom or Teams calls.

Tactics: Presenting a cloned face and voice to pass identity checks or submitting fraudulent video IDs that appear authentic.

Current solution challenges

The current solution to deepfake video injection scams is face biometrics, but its effectiveness is low.

Although face-biometric systems are increasingly deployed in video KYC, these solutions hinge on matching pixel-level features and basic liveness cues, both of which high-quality deepfakes can convincingly reproduce.

Modern AI models can replicate blinking patterns, subtle head movements, and even lighting variations to pass standard liveness checks, making it impossible for the algorithms to reliably distinguish a real person from a synthetic overlay.

AI Impersonation Scams

Impersonation scams that are enhanced with AI tools, like voice cloning or deepfake video, are becoming increasingly popular.

In these scams, criminals may use just a few seconds of someone’s recorded voice (from social media or voicemail) to create a realistic audio message asking for help or payment.

In one real-world case, a 73-year-old woman narrowly escaped falling victim to an AI-enhanced “grandparent scam” after receiving a call from someone who sounded exactly like her grandson, claiming to be in jail and needing bail money. She and her husband withdrew thousands of dollars before a bank manager warned them that this might be a scam.

In a typical scenario, a victim receives a phone call from what sounds exactly like their child or employer, urgently requesting funds for an “emergency” or “business deal.”

Since these scams often result in authorized payments made under false pretenses, they are very difficult to reverse or recover.

Primary targets

External: Individual banking customers and employees with payment authority (for example, finance staff or executives).

How it works

Attack vectors: Phone calls, video calls, voice notes, social media, and messaging apps.

Tactics: Fraudsters use AI-generated voice or video to impersonate trusted individuals, creating convincing stories about emergencies, time-sensitive payments, or verification requests.

Current solution challenges

Current solutions to AI impersonation scams include customer awareness campaigns and payment verification checks, but their effectiveness is low.

Although education encourages banking customers to verify before making a payment transfer, AI-generated voices and videos are often difficult to detect in the moment. What usually happens is that emotional manipulation and perceived urgency override suspicion.

Synthetic/Stolen Identity Scams

In synthetic/stolen identity scams, criminals either fabricate entire personas or hijack real ones and then leverage them to open bank accounts, apply for credit, or commit fraud.

Synthetic Identity Fraud

Synthetic identity fraud occurs when criminals create a fake identity by combining real and fake personal information, then use it to defraud a bank.

Fraudsters may combine a legitimate Social Security number (obtained through a data breach) with a fabricated name and date of birth. The fake identity is then used to open bank accounts, apply for credit, or access services.

Synthetic identity fraud rates increased by 60% last year. In the same period, there was a 173% increase in synthetic voice calls.

Synthetic identity fraudsters now behave like real customers for months (e.g., making small repeat payments into accounts), build up credit, and then default on large loans or use accounts for mule activity or fraud.

Primary targets

Internal: Banks’ onboarding systems, credit issuing platforms, and KYC identity verification processes.

How it works

Attack vectors: Fake documents, manipulated ID photos, synthetic SSNs or national IDs, and “seasoned” identities with real credit history.

Tactics: Fraudsters make small, regular deposits and payments to establish a solid credit footprint before requesting large loans, defaulting, or routing funds through mule accounts.

Current solution challenges

The current solution to synthetic identity fraud is identity verification (IDV), but its effectiveness is only medium.

IDV solutions can protect banks against entirely fabricated documents by flagging poorly forged IDs and matching data against authoritative sources. But they struggle to catch “seasoned” synthetic identities.

Because fraudsters mix genuine SSNs or credit histories with their fake personas, these profiles often pass real-time database and document checks without raising red flags.

Stolen Identity Misuse Fraud (Identity Theft)

Stolen identity misuse fraud is a type of fraud that occurs when a criminal uses someone’s real personal information, such as their name or credentials, without consent to open accounts, apply for credit, or take over existing services.

Identity fraud is becoming extremely common. Every year, almost 1 in 10 (9%) US residents aged 16 or older experience identity theft.

Primary targets

External: Existing customers whose data has been exposed.

Internal: Onboarding systems and fraud prevention tools that rely on static identity data.

How it works

Attack vectors: Data breaches, phishing, credential stuffing, information sold on the dark web, and loopholes in account recovery processes.

Tactics: Resetting passwords with stolen credentials, impersonating victims in KYC checks, or social-engineering support staff to update contact details and bypass MFA.

Current solution challenges

The current solution to stolen identity misuse fraud (identity theft) is identity verification (IDV), but its effectiveness is only medium.

ID checks can spot fake documents and match applicant data against trusted lists, but they can’t tell when someone is using real, stolen information.

How Legacy and Challenger Banks Can Prevent Dangerous Banking Scams

Understanding and classifying bank scams is key to preventing them.

From BEC scams and instant‑payment APP fraud to AI‑driven deepfakes and synthetic identity schemes, the breadth and sophistication of bank scams demonstrate just how quickly scammers and fraudsters are innovating.

Keeping these fourteen fraud categories top of mind and recognizing how they evolve with new technologies will be essential for accurately gauging the true scope of 2025’s banking‑fraud landscape.

Prevent Dangerous Banking Scams With IronVest’s ActionID

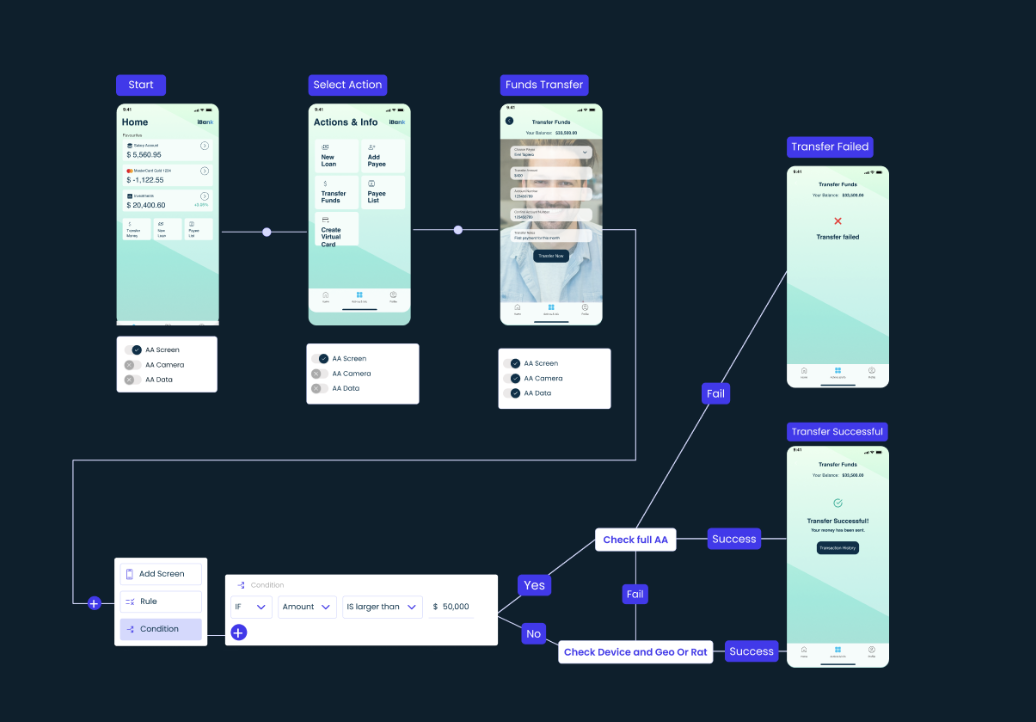

ActionID is IronVest’s continuous authentication solution.

It works by creating a continuous biometric link between a person and their account. The link is boolean, so the person is either who they say they are or not.

There is no room for identity impersonation with Authentic Action. Our solution is designed to combat the banking scams on this list and remain resistant to deepfake technology.

What’s remarkable about Authentic Action is that it is not only safer than alternative solutions on the market but also genuinely easier to use for the end user. There is no need for 2FA codes, push notifications, or any other kind of in-session interruption.

Authentication is continuous and, critically for today’s banks, private.

Get a demo of IronVest today to see how your bank can roll out reliable, private, and future-proof protection against banking scams.